One question we hear a lot at Salience is “what do I index on my website?” – this simple question can often lead to extremely complex answers, especially in the context of large e-commerce websites.

E-commerce websites often require serious categorisation and user features to help customers find what they want easily. Enter faceted navigation…

What are Filter Pages and faceted navigation items?

Filter pages are primarily used to sort or narrow the content on the page. This categorisation and these user preferences are usually handled by faceted navigation, sometimes with thousands of different variations per category.

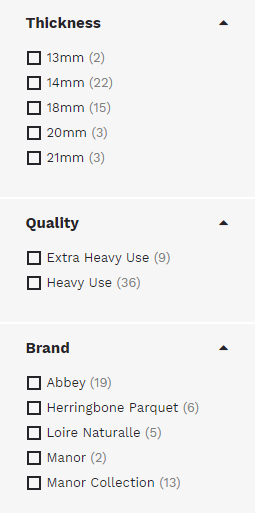

Typically, faceted navigation looks like this:

There are of course variations of this, but generally you will find faceted navigation on the left-hand side of a website with a selection of boxes to suit user preferences.

Why Filter Pages Cause Issues

As previously mentioned, we use filter pages to narrow and sort the page content.

When a user inputs their desired preferences, a filter page is generated. This changes the URL from something like:

https://example.com/gloves

to

https://example.com/gloves?colour=blue

This not only changes the URL but also filters the page content to show only blue gloves. It is worth keeping in mind that several filters can be applied to a single page.

If a user wanted to find Barbour leather gloves in blue for between £50 and £70, sorted by price from high to low, we could end up with a URL that looks like this:

https://example.com/gloves?brand=barbour&fabric=leather&colour=blue&min-price=50&max-price=70&sort=pricedesc

This shows how easily URLs can get out of control. If we break that URL down, we can see how many variants it passes through along the way:

https://example.com/gloves?brand=barbour

https://example.com/gloves?brand=barbour&fabric=leather

https://example.com/gloves?brand=barbour&fabric=leather&colour=blue

https://example.com/gloves?brand=barbour&fabric=leather&colour=blue&min-price=50

and so on.
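The short Python sketch below shows how fast these combinations multiply. It enumerates every URL that a handful of facets can produce for the /gloves category, assuming a user can pick at most one value per facet and that parameters always appear in the same order. The facet names and values are hypothetical and only for illustration.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical facet values for the /gloves category (illustrative only).
FACETS = {
    "brand": ["barbour", "dents", "hestra"],
    "fabric": ["leather", "wool"],
    "colour": ["blue", "black", "brown", "red"],
    "sort": ["pricedesc", "priceasc"],
}

def filter_urls(base, facets):
    """Yield every URL produced by choosing at most one value per facet."""
    names = list(facets)
    # None stands for "this facet is not applied".
    options = [[None] + values for values in facets.values()]
    for combo in product(*options):
        params = [(name, value) for name, value in zip(names, combo) if value is not None]
        yield f"{base}?{urlencode(params)}" if params else base

urls = list(filter_urls("https://example.com/gloves", FACETS))
print(len(urls))  # (3+1) * (2+1) * (4+1) * (2+1) = 180
```

Even with four small facets, one category yields 180 distinct URLs. Multi-select values, free-form price ranges or parameters appearing in a different order would push the count far higher.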

Although this is great for users, who can find exactly what they want, it can cause havoc for Google if left unmanaged. Each one of those URLs is a unique URL for Google to crawl, understand and rank within the SERPs.

Each of these URLs contains largely the same content as the original /gloves page, which can create huge amounts of duplicate content. This can have a significant negative impact on a website's organic visibility.

On top of this, a huge number of unique filtered URLs will sap crawl budget (a crucial factor for large websites, especially e-commerce ones). In effect, your website won't reach its full ranking potential.

Pagination

Pagination is another source of filter pages. Pagination is when a website divides content across several pages. For example, an e-commerce site might split a category's products across page 1, page 2, page 3 and so on.

Paginated pages suffer from the same issues as faceted navigation, with duplicated content and poor crawl management.

A typical paginated page will look similar to this:

https://example.com/gloves?p=2

As a quick note, Google updated their documentation in 2019 to say that they no longer use rel="prev" and rel="next" as an indexing signal for paginated pages. These tags were previously the standard way to manage pagination. Although Google are good at recognising paginated pages on their own, it is still worth webmasters managing pagination, especially on websites of scale.

Why are Filter Pages an Issue?

Filter pages can become a huge issue on e-commerce websites especially due to the sheer volume of customisation. Take a look at this e-commerce store’s recognised pages:

This website doesn’t even have 2000 product pages yet is producing over 90 million pages – the majority of which are filter pages. If these weren’t managed correctly, Google would struggle to rank this website appropriately.

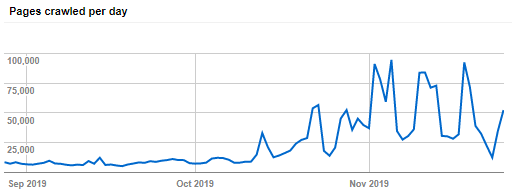

Crawl Budget & Filter Pages

Crawl budget is the number of URLs Googlebot can and wants to crawl on a website within a given timeframe. This can vary drastically from website to website.

The issue with crawl budget for e-commerce sites is the chain from crawling to ranking. If Google doesn't crawl a page, it can't index it, and if a page isn't indexed, it isn't going to rank. So if the number of URLs on your website drastically exceeds the number Googlebot can crawl within its allocated crawl budget, some of your pages won't be indexed at all.
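A quick back-of-the-envelope calculation shows the scale of the problem. The Python sketch below assumes a hypothetical steady crawl rate of 50,000 URLs per day and that every URL is requested once. It compares the 90 million URLs of the store mentioned above with its fewer than 2,000 product pages. Real crawl rates vary widely from site to site, and Googlebot does not crawl uniformly, so treat the numbers as illustrative only.

```python
def days_to_crawl(total_urls: int, urls_crawled_per_day: int) -> float:
    """Days needed to request every URL once at a steady, hypothetical crawl rate."""
    return total_urls / urls_crawled_per_day

CRAWL_RATE = 50_000      # hypothetical URLs crawled per day
ALL_URLS = 90_000_000    # roughly the store discussed above, filter pages included
USEFUL_URLS = 2_000      # upper bound on its product pages (category pages ignored)

print(days_to_crawl(ALL_URLS, CRAWL_RATE))     # 1800.0
print(days_to_crawl(USEFUL_URLS, CRAWL_RATE))  # 0.04
```

At that assumed rate, a single pass over every URL would take almost five years. The pages that actually matter would fit into a fraction of one day.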

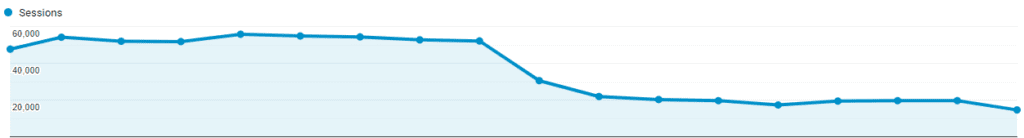

When the page that isn’t indexed is a major category page, this is when a website has some critical technical SEO issues that need urgent attention – as this could cause a huge dent in organic traffic.

Duplicated Content

Each URL with a filter attached to it is a unique URL in Google's eyes. So even though the page has been filtered (generally at a product level), the content on the filtered page is largely the same as that on the original. This means your website now has duplicate content across many pages, which sends poor signals to Google.

Duplicated content presents three major issues for Google:

- Google are unsure which version(s) to include within the index, and which versions to exclude

- Off-site metrics such as links can get diluted or even lost as Google don’t know which page should be attributed the link value

- Google can struggle with deciding which version of the page to rank for specific search queries

Google expect a one-to-one pairing between URL and unique content. By having the same content on multiple URLs through filter pages, website rankings and performance can drop within the SERPs due to sending Google confusing signals.
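One practical way to restore that one-to-one pairing is to decide which query parameters only filter or sort a page, then strip them to produce a single preferred URL. The Python sketch below is minimal and assumes the parameter names from the gloves example above are the only filter parameters in use. `FILTER_PARAMS` and `canonical_url` are hypothetical names.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical set of parameters that only filter or sort a category page.
FILTER_PARAMS = {"brand", "fabric", "colour", "min-price", "max-price", "sort"}

def canonical_url(url: str) -> str:
    """Drop filter/sort parameters (and any fragment) so filtered variants share one URL."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in FILTER_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

print(canonical_url("https://example.com/gloves?brand=barbour&colour=blue&sort=pricedesc"))
# https://example.com/gloves
print(canonical_url("https://example.com/gloves?p=2&colour=blue"))
# https://example.com/gloves?p=2
```

The pagination parameter `p` is deliberately left out of `FILTER_PARAMS`, so page 2 keeps its own URL. Whether that suits a particular site is a judgement call, not a rule.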

Keyword Cannibalisation

Keyword cannibalisation is when multiple pages on a single domain compete to rank for the same search query. This sends Google confusing signals, as it now has to decide which page, if any, should rank for that keyword. As a result, the “wrong” page can often end up ranking for the term instead of the desired page.
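A simple way to spot possible cannibalisation is to group ranking data by query and flag any query that more than one URL ranks for. The Python sketch below assumes you already have (query, ranking URL) pairs, for example from a rank tracker or from a Search Console performance export that includes both the query and page dimensions. The rows shown are hypothetical.

```python
from collections import defaultdict

# Hypothetical (query, ranking URL) rows for a single domain.
rows = [
    ("mens bikes", "https://example.com/bikes/mens"),
    ("mens bikes", "https://example.com/bikes"),
    ("womens bikes", "https://example.com/bikes/womens"),
    ("leather gloves", "https://example.com/gloves?fabric=leather"),
    ("leather gloves", "https://example.com/gloves/leather"),
]

def cannibalised_queries(rows):
    """Return {query: sorted URLs} for queries that more than one URL ranks for."""
    pages_by_query = defaultdict(set)
    for query, page in rows:
        pages_by_query[query].add(page)
    return {query: sorted(pages) for query, pages in pages_by_query.items() if len(pages) > 1}

for query, pages in cannibalised_queries(rows).items():
    print(query, pages)
```

A flagged query is only a prompt for a manual check. Two URLs ranking for the same term is sometimes intended.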

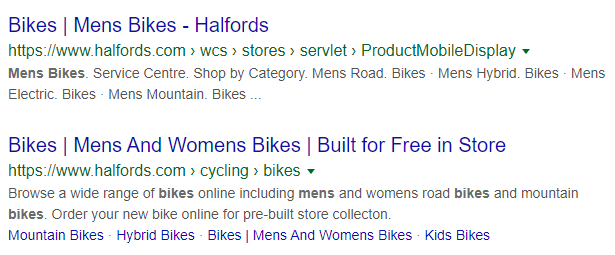

An example of this would be Halfords with the term “Mens Bikes”:

Here we can see that they have two pages ranking for the term, one of which is the men’s bike page, whilst the other is a generic bikes page. Clearly, the first result serves the search query with higher accuracy than the second result. But due to cannibalisation, they are both ranking for the same search query – this could indicate that the bikes page isn’t performing as well as it should.

However, Halfords in this case are lucky that both results are shown as it is very common within the SERPs that just a single URL is shown, which if incorrect, can lead to extremely poor performance for that search query.

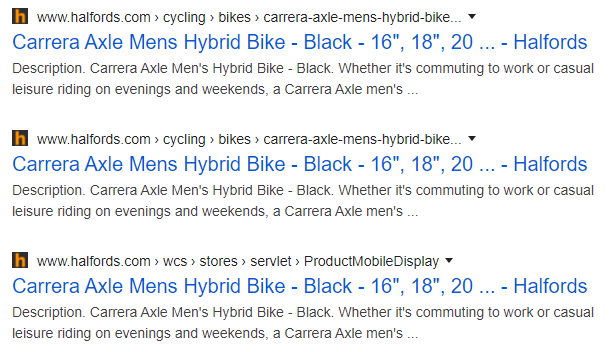

Furthermore, a quick search shows that they also suffer from filter and parameterised pages being indexed when they shouldn’t be:

Even large websites get the fundamentals with filter pages wrong!

Fixing the Issues

Using Crawl Budget Effectively

Crawl budget can be optimised in a variety of ways, including improving page speed and only serving Google high-quality pages with unique content.

The benefit of fixing these issues is that Google will often visit your website more frequently and crawl more pages on it, resulting in an overall increase in crawl budget.

As crawling is concentrated on the high-value pages rather than the filter pages, it is unlikely that important pages will be missed from the index. Your website should therefore have an extremely high indexation rate on Google.

This benefit is two-fold. Google will now be able to see accurately which pages are important and rank them accordingly (and generally in a better position), as those pages aren't competing against similar filter pages.

Google have created a great beginner's resource on this topic.

Optimising Faceted Navigation

By optimising the faceted navigation, a website can fix a myriad of different issues – including:

- Duplicate content

- Crawl waste

- Keyword cannibalisation

- Link equity distribution

When deciding how to optimise a faceted navigation, we identify the useful pages that we do want to index and aim to minimise the number of low-value pages that we don't.

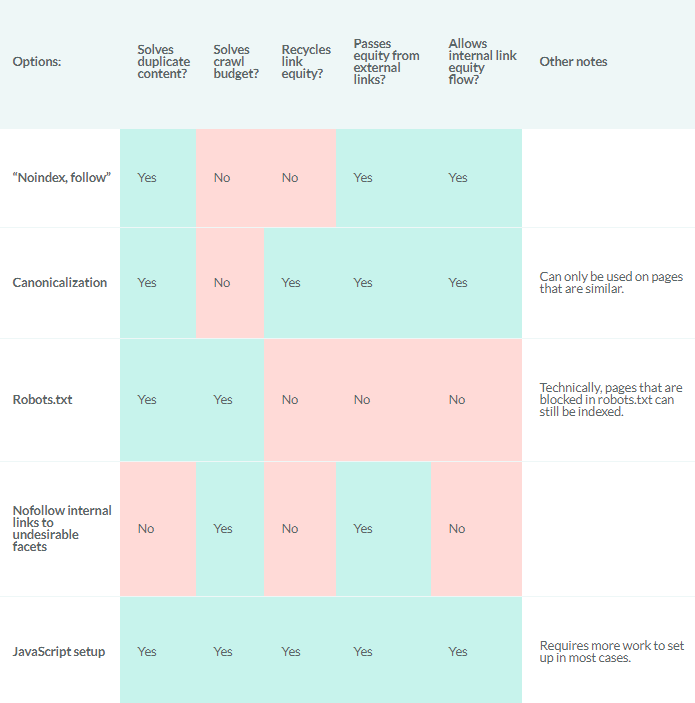

The most commonly used methods are:

- “Noindex, follow” – This tells Googlebot not to index specific filtered pages, but to continue following the links on them

- Canonicalisation – This essentially tells Google that there is a preferred version of the page they are on, such as a filter page canonicalising to the non-filtered version

- robots.txt – This tells Google not to crawl selected parts of the website (e.g. parameterised pages)

- “Nofollow” internal links to undesirable facets – This attribute tells Google not to follow links to facets that we deem unimportant for them to crawl

- JavaScript setup – This is when a faceted navigation is built in a way that limits URL changes, ideally using only a single URL
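The Python sketch below makes the first two options concrete. It shows how a category template might choose between a canonical tag and "noindex, follow" for a given URL. The policy it encodes is purely hypothetical. Single-facet brand or colour pages are assumed to have enough search demand to keep indexable, and every other filter or sort combination is treated as low value. `INDEXABLE_FACETS` and `head_tags` are illustrative names, and pagination is not handled.

```python
from html import escape
from urllib.parse import parse_qsl, urlsplit

# Hypothetical policy: only single-facet brand or colour pages are worth indexing.
# Pagination parameters are not handled, to keep the sketch short.
INDEXABLE_FACETS = {"brand", "colour"}

def head_tags(url: str) -> list[str]:
    """Return the robots/canonical tags a category template might emit for a URL."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query)
    base = f"{parts.scheme}://{parts.netloc}{parts.path}"
    if not params:
        # Unfiltered category page: indexable, with a self-referencing canonical.
        return [f'<link rel="canonical" href="{escape(base)}">']
    if len(params) == 1 and params[0][0] in INDEXABLE_FACETS:
        # Valuable single-facet page: indexable under its own URL.
        self_url = f"{base}?{parts.query}"
        return [f'<link rel="canonical" href="{escape(self_url)}">']
    # Low-value combination: keep it out of the index but let crawlers follow its links.
    return ['<meta name="robots" content="noindex, follow">']

for u in ["https://example.com/gloves",
          "https://example.com/gloves?colour=blue",
          "https://example.com/gloves?brand=barbour&fabric=leather&colour=blue"]:
    print(u, head_tags(u))
```

For low-value combinations, the sketch emits "noindex, follow" on its own. Adding a canonical pointing at the unfiltered page would give Google two different instructions for the same URL.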

Moz have a great resource on the points above:

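Although it is listed above, we would generally recommend against using robots.txt to optimise a faceted navigation, as we commonly find vast numbers of pages “blocked” in robots.txt appearing within the SERPs. This happens because robots.txt controls crawling rather than indexing, so a blocked URL can still be indexed if other pages link to it.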
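It can help to check exactly which URLs a robots.txt rule would stop Google from fetching. The Python sketch below is a deliberately simplified matcher for Google-style Disallow patterns, supporting the `*` wildcard and the `$` end anchor. It ignores Allow rules, user-agent groups and rule precedence, so it is not a full robots.txt parser. The rule `/*?` (block any URL with a query string) is a hypothetical example.

```python
import re

def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern using * and $ into a regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))

def is_disallowed(path_and_query: str, disallow_rules: list[str]) -> bool:
    """True if any Disallow pattern matches from the start of the path."""
    return any(pattern_to_regex(rule).match(path_and_query) for rule in disallow_rules)

# Hypothetical rule: block every URL that carries a query string.
RULES = ["/*?"]

for path in ["/gloves", "/gloves?colour=blue", "/gloves?brand=barbour&sort=pricedesc"]:
    print(path, "blocked" if is_disallowed(path, RULES) else "crawlable")
```

A blocked URL is never fetched, so Google also never sees a noindex tag or canonical placed on it. If other pages link to such a URL, it can still end up in the index.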

It is worth noting that there is no single solution that will work for every site, as every website has its own nuances, obstacles and differences to consider.

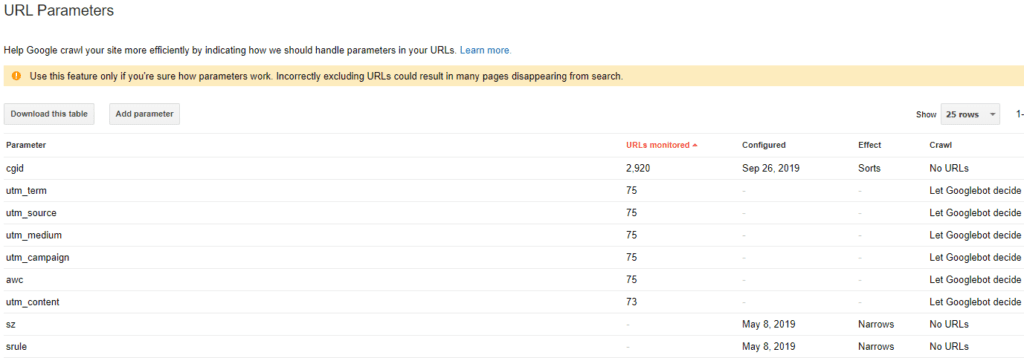

URL Parameter Tools

Finally, we can also use the URL Parameters tool in Google Search Console. This is something of a nuclear option, but it can be extremely effective at removing pages from the index and stopping Google from crawling selected parameterised pages.

However, we would advise caution when making changes here, as a misconfiguration could wipe out a huge chunk of organic traffic. This tool should therefore only be used when you are certain of the impact it will have on the website.

By excluding parameters that we don't want indexed, we reduce the number of pages that Google crawls, which, as previously mentioned, optimises the crawl budget for your website. Once again, this will boost your website's overall organic visibility, as Google can find the key, high-value pages easily without being bogged down by filter pages.

Concluding Points

Filter pages can have a huge negative impact on websites if not handled properly, as the number of pages can grow exponentially when additional filters are added to the navigation. All of this duplicates content, wastes crawl budget and generally sends the wrong signals to Google.

However, filter URLs can be managed so they don’t hinder organic performance.

Whilst we have stated some of the methods that can be used to optimise filter pages and faceted navigation, every website and platform will be different. Therefore, it is important to take time to assess the situation case by case, as there is no one-size-fits-all approach to managing filter pages.

All sounding a little too technical? Let our SEO team do the hard work for you – contact us for more information today.