The data in your Google Analytics account is not always as authentic as it seems at first glance.

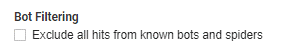

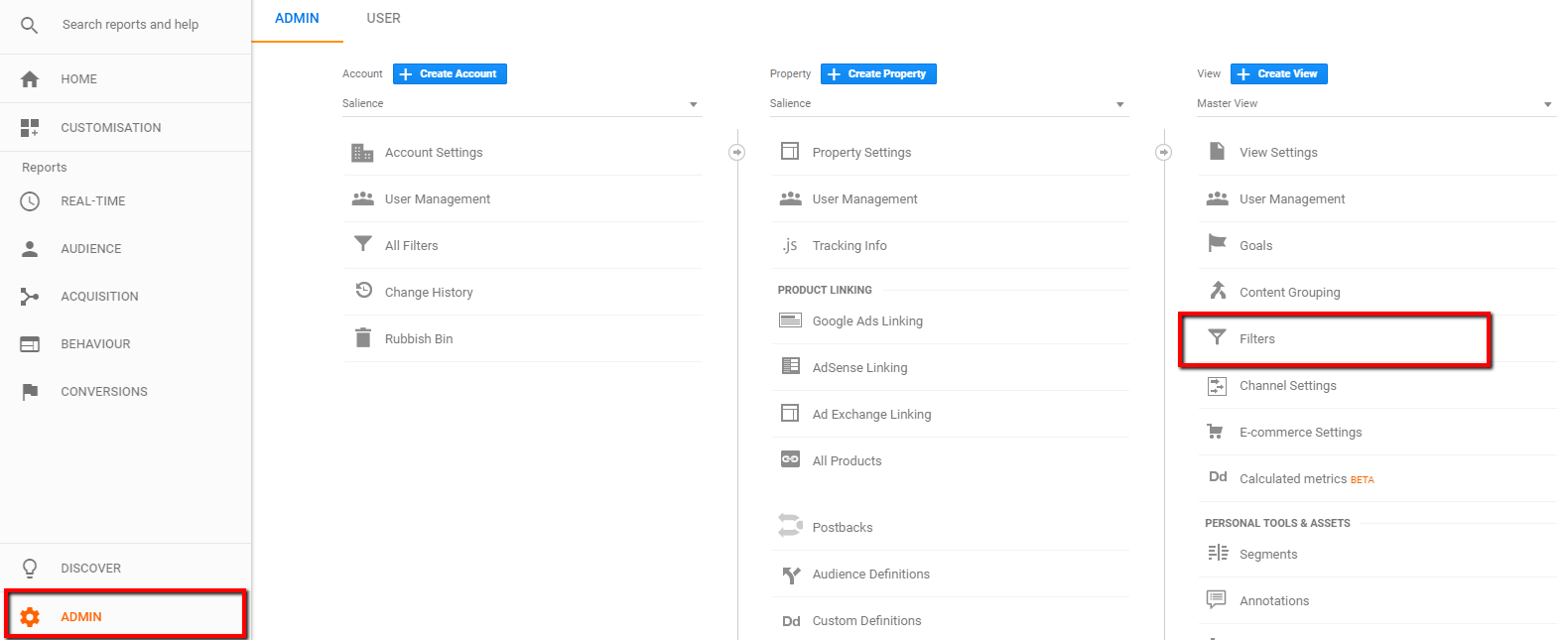

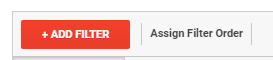

One of the bigger data integrity issues that can occur is traffic from spiders and robots (bots) hitting your website. This traffic is not legitimate, but it can often look as though it is.

Bot traffic is not always malicious, but it adds absolutely no value to your Google Analytics data; in fact, having it in your account can skew your real data quite significantly.

There is no catch-all for eliminating ‘ghost’ or ‘spam’ traffic in Google Analytics, but here we’ll look at some of the more common ways of spotting these pesky bleeders in your account and some quick tips on how to turf ‘em out.

How do I spot bot traffic?

Most of the time, fake traffic has attributes that make it easy to spot.

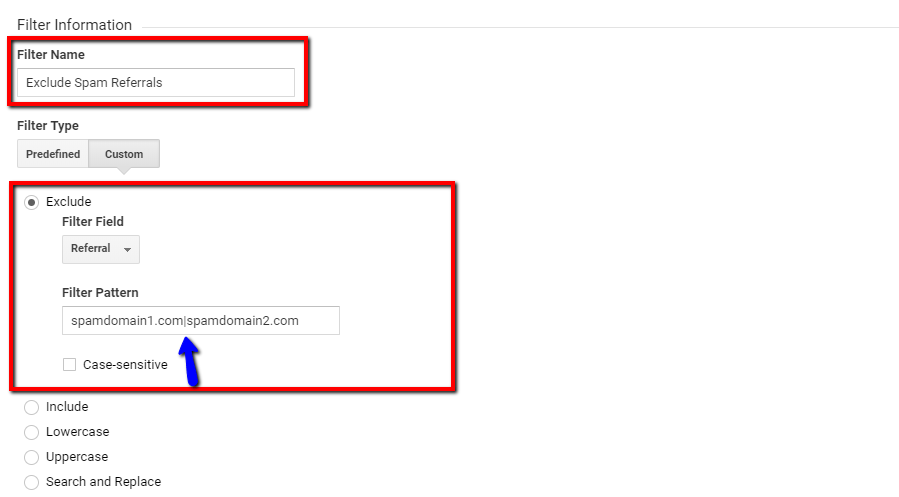

As I alluded to in a previous article, a sudden increase in direct or referral traffic would be a huge warning sign that there’s been some foul play.

Of course, that’s not where the investigation ends: the increase may be legitimate, the result of marketing activity or seasonality. However, if the increase is unexpected, you will want to follow it up with some investigative work.
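If you export daily sessions for a channel (for example, Direct), a simple statistical check can help you judge whether a jump is truly "sudden". The sketch below is an illustration only: it assumes you already have the daily counts as a Python list, and the 7-day window and 3-standard-deviation threshold are arbitrary starting points, not Google Analytics settings. `find_spikes` and the sample numbers are hypothetical.

```python
from statistics import mean, stdev


def find_spikes(daily_sessions, window=7, threshold=3.0):
    """Return the indices of days whose sessions sit more than `threshold`
    standard deviations above the mean of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_sessions)):
        history = daily_sessions[i - window:i]
        avg = mean(history)
        spread = stdev(history)
        if spread == 0:
            # Perfectly flat history: any increase at all counts as a spike.
            if daily_sessions[i] > avg:
                spikes.append(i)
        elif (daily_sessions[i] - avg) / spread > threshold:
            spikes.append(i)
    return spikes


# Hypothetical daily Direct-channel sessions exported from a GA report.
direct_sessions = [120, 115, 130, 125, 118, 122, 127, 124, 610, 119]
print(find_spikes(direct_sessions))
```

A flagged day is only a prompt to investigate; check your campaign calendar and seasonal patterns before treating it as bot traffic.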

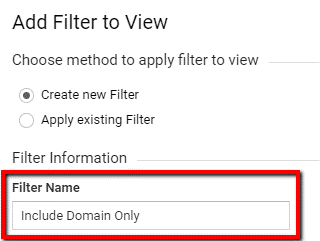

One of my usual starting points in an investigation is the ‘Hostname’ report (Audience > Technology > Network > Hostname).


Try this out for yourself. Are there any strange-looking domains in here? Ideally, the only domains in this report should be those of your own website(s); anything else is likely to be illegitimate.
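If the Hostname report is long, you can run the same check against an export of it. This is a minimal sketch assuming you have the report as `(hostname, sessions)` pairs and a set of domains you own; `unexpected_hostnames` and the example domains are hypothetical. Subdomains of your own domains (such as `www.`) are treated as legitimate.

```python
def unexpected_hostnames(rows, allowed):
    """Return (hostname, sessions) pairs whose hostname is neither one of
    the `allowed` domains nor a subdomain of one, busiest first."""
    flagged = []
    for hostname, sessions in rows:
        host = hostname.lower().rstrip(".")
        owned = any(host == d or host.endswith("." + d) for d in allowed)
        if not owned:
            flagged.append((hostname, sessions))
    # Sort so the hostnames sending the most sessions appear first.
    return sorted(flagged, key=lambda row: row[1], reverse=True)


allowed = {"example.com"}  # hypothetical: replace with your own domain(s)
rows = [  # hypothetical Hostname report export
    ("www.example.com", 5400),
    ("example.com", 310),
    ("(not set)", 220),
    ("free-seo-traffic.example", 95),
]
for hostname, sessions in unexpected_hostnames(rows, allowed):
    print(hostname, sessions)
```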

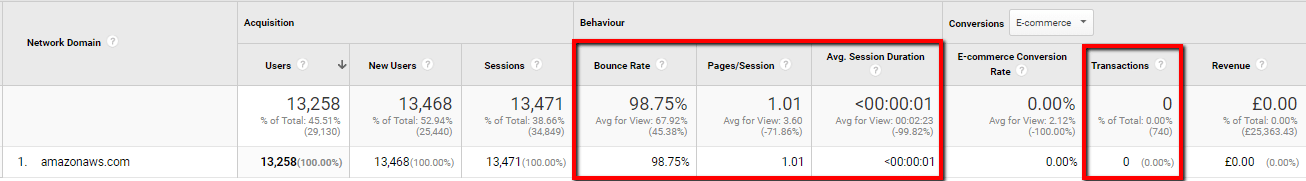

Another good dimension to use is ‘Network Domain’; again, check whether any network provider domains here look suspicious. ‘amazonaws.com’ is the domain of Amazon Web Services, where many bots and crawlers are hosted, and I’ve seen traffic from it wreak havoc across many accounts.
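To gauge how much of your traffic a suspicious network accounts for, you can total it from an export of the Network Domain report. The sketch below assumes `(network_domain, sessions)` pairs and a watchlist you maintain yourself; `watchlist_share` and the sample figures are hypothetical.

```python
def watchlist_share(rows, watchlist):
    """Return (matches, share): the rows whose network domain is on the
    watchlist (or a subdomain of it) and the fraction of all sessions
    they account for."""
    total = sum(sessions for _, sessions in rows)
    matches = [
        (domain, sessions)
        for domain, sessions in rows
        if any(domain == w or domain.endswith("." + w) for w in watchlist)
    ]
    matched = sum(sessions for _, sessions in matches)
    share = matched / total if total else 0.0
    return matches, share


watchlist = {"amazonaws.com"}
rows = [  # hypothetical Network Domain report export
    ("comcast.net", 1800),
    ("amazonaws.com", 600),
    ("(not set)", 400),
    ("verizon.net", 1200),
]
matches, share = watchlist_share(rows, watchlist)
print(matches, f"{share:.1%}")
```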

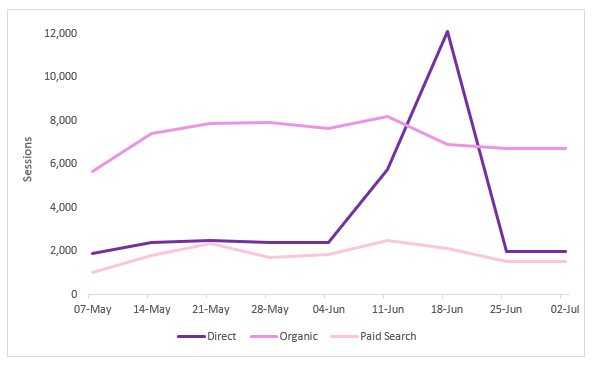

Spam traffic can be sussed out using metrics too. Its engagement stats will look peculiar: extremely high bounce rates and low pages/session and average session duration, like in the screenshot below, are a good indicator of bots. After all, the bot’s only intention is to crawl the site and leave its data behind; it does not interact with the site as a real person would.
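The engagement pattern described above can be expressed as a simple rule over exported rows. This is a sketch, not a Google Analytics feature: the `Row` fields mirror the report columns, bounce rate is assumed to be a fraction between 0 and 1, and the thresholds in `looks_like_bot` are illustrative assumptions you should tune to your own site.

```python
from dataclasses import dataclass


@dataclass
class Row:
    source: str
    sessions: int
    bounce_rate: float           # fraction, 0.0 to 1.0
    pages_per_session: float
    avg_session_duration: float  # seconds


def looks_like_bot(row, bounce_at_least=0.95, pages_at_most=1.1,
                   duration_at_most=5.0):
    """Heuristic: nearly every session bounces, sees about one page and
    spends almost no time on site. All three conditions must hold."""
    return (
        row.bounce_rate >= bounce_at_least
        and row.pages_per_session <= pages_at_most
        and row.avg_session_duration <= duration_at_most
    )


rows = [  # hypothetical source/medium rows
    Row("google / organic", 4200, 0.48, 3.2, 154.0),
    Row("bot-source.example / referral", 900, 1.0, 1.0, 0.0),
    Row("newsletter / email", 350, 0.35, 4.1, 210.0),
]
flagged = [row.source for row in rows if looks_like_bot(row)]
print(flagged)
```

Requiring all three conditions keeps single-page landing pages with genuinely high bounce rates (but real time on page) from being flagged.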

Ecommerce and goal conversion data is also a good reference point: if a particular dimension value has a high number of sessions but absolutely no conversions, it could well be spam.
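Here is a minimal sketch of that check, assuming an export of `(dimension_value, sessions, conversions)` rows; `no_conversion_dimensions` and the sample rows are hypothetical. The `min_sessions` cut-off is an assumption meant to skip small sources that simply haven't had a chance to convert yet.

```python
def no_conversion_dimensions(rows, min_sessions=100):
    """Return (value, sessions) for dimension values with at least
    `min_sessions` sessions and zero conversions, busiest first."""
    flagged = [
        (value, sessions)
        for value, sessions, conversions in rows
        if sessions >= min_sessions and conversions == 0
    ]
    return sorted(flagged, key=lambda row: row[1], reverse=True)


rows = [  # hypothetical source/medium rows: (value, sessions, conversions)
    ("google / organic", 4200, 63),
    ("spam-domain.example / referral", 780, 0),
    ("partner-blog.example / referral", 40, 0),
    ("(direct) / (none)", 1500, 18),
]
print(no_conversion_dimensions(rows))
```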

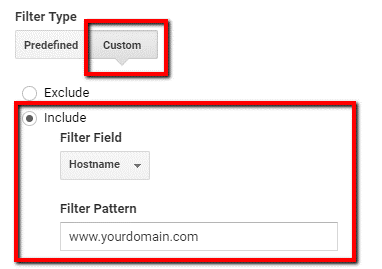

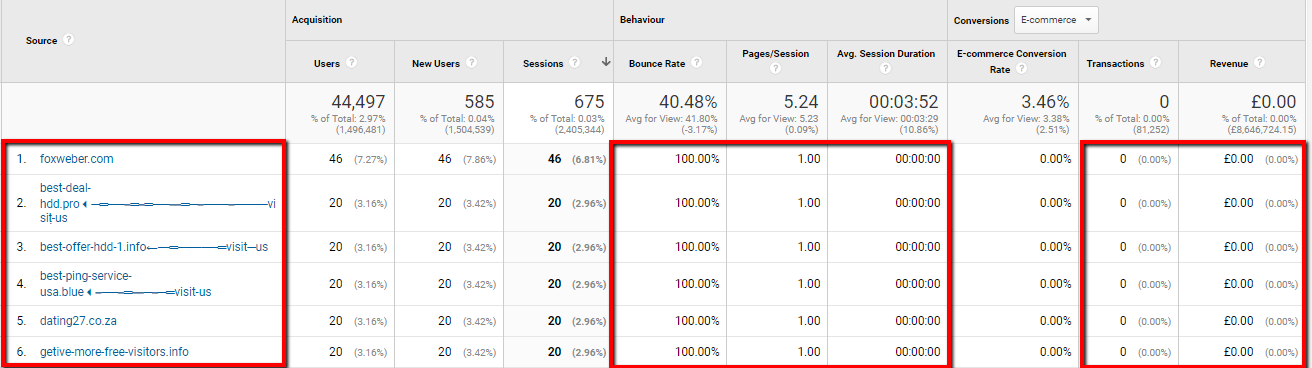

You can also see traces of spam in the Referral channel. Referral spam is a bit sneakier than ordinary bots, as spammers try to mask their identity with fake referrer headers, usually carrying the name of the domain they want to promote. Go to your list of referrals (Acquisition > Channels > Referrals), sort the bounce rate column from low to high and you will likely see some suspicious domains, like the examples in the screenshot.
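If you prefer to review an export of the Referrals report, the sort can be reproduced in a few lines. This sketch assumes `(source, sessions, bounce_rate)` rows with bounce rate as a fraction; `suspicious_referrals` is hypothetical, and the rule of keeping only referrers at exactly 0% or 100% bounce (with a minimum session count) is an assumption, not something Google Analytics applies for you.

```python
def suspicious_referrals(rows, min_sessions=20):
    """Keep referrers with at least `min_sessions` sessions whose bounce
    rate sits at either extreme (0% or 100%), then sort low to high by
    bounce rate, as when sorting the column in the Referrals report."""
    candidates = [
        row for row in rows
        if row[1] >= min_sessions and (row[2] <= 0.0 or row[2] >= 1.0)
    ]
    return sorted(candidates, key=lambda row: row[2])


rows = [  # hypothetical Referrals export: (source, sessions, bounce_rate)
    ("news-site.example", 320, 0.41),
    ("seo-offer.example", 150, 1.0),
    ("buttons-for-site.example", 90, 0.0),
    ("forum.example", 12, 1.0),
]
for source, sessions, bounce in suspicious_referrals(rows):
    print(f"{source}: {sessions} sessions, {bounce:.0%} bounce")
```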

Fake traffic is not always obvious, but try using all of the points above in combination to catch it in the act. Check through your list of sources for unusual looking domains, apply secondary dimensions for extra analysis and look at those engagement metrics closely.