One of the first steps to getting more organic traffic via SEO is making sure Google can actually understand your site.

If Google can’t scan your site effectively, you can kiss your SEO traffic goodbye.

We know this is not what you want, so in this post, we’re going to explain exactly how Google crawls and reads your website.

With this information, you can create content and site architecture that Google loves so that you can get more visibility in the search results.

Let’s dive straight in.

Jump to:

- What are the core functions of a search engine?

- How can you help Google crawl your site?

- How does Google parse your site?

- How does Google index the web?

- How does Google read your site so you can start ranking?

- Can Google read backlinks?

What are the Core Functions of a Search Engine?

Before we get into the maths behind the way Google reads your page, I want to first explain the three main purposes of a search engine bot.

In general, it is agreed there are three key areas that search engines focus on:

- Crawling

- Indexing

- Ranking

All these elements play into the way Google reads your site. So, let’s explore them.

Crawling the Web (With Their Creeping Robot Spiders)

Although you can’t see Google crawling the web, in the background, there are a load of secret spiders scanning every web-based asset. For all the arachnophobes out there, you need not worry! These robots can’t harm you.

What they do is crawl around the web and find fresh, recently public content. They do this by landing on a URL (aka, your site) and hopping through all the different links to various pages.

(This is why it is important to have an easy site architecture, but more on that later).

They then cache the pages they find on your site so they can be loaded up when a user finds you on the SERPs.
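To make the "hopping through links" idea concrete, here is a minimal sketch of the link-discovery step using only Python's standard library. The page markup and the example.com domain are placeholders, and this is only an illustration of the general idea, not how Google's crawler is actually built.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Turn relative links such as "/blog" into absolute URLs.
                self.links.append(urljoin(self.base_url, value))


def extract_links(base_url, html):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content; example.com is a placeholder domain.
page = '<a href="/blog">Blog</a> <a href="https://example.com/contact">Contact</a>'
links = extract_links("https://example.com/", page)
print(links)
```

A crawler would repeat this on every URL it discovers, which is why pages with no links pointing at them are so easy to miss.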

Pretty high-tech stuff, am I right?

Now, let me make something clear. Yes, Google is the all-seeing, all-powerful supercomputer and it’s pretty good at finding your pages. However, from time to time, it can struggle.

Here’s a quick bird’s eye view of why Google hasn’t found your site:

- Your content is hidden behind a login page

- Your content is hidden behind a contact form

- Your content is not linked to your navigation or internally linked from other pages

- You have code on the page blocking crawlers

- You have issues with your robots.txt file

These are but a few of the issues a crawler can face when it comes to finding your website.

They’re simple but common.
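If you suspect your robots.txt file is the culprit, you can test it yourself. The sketch below uses Python's built-in `urllib.robotparser` to check whether a crawler is allowed to fetch a URL. The robots.txt rules and the example.com URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents: block every crawler from /private/.
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Ask whether a crawler identifying itself as Googlebot may fetch each URL.
blog_allowed = parser.can_fetch("Googlebot", "https://example.com/blog/")
private_allowed = parser.can_fetch("Googlebot", "https://example.com/private/report")

print("blog:", blog_allowed)
print("private:", private_allowed)
```

If an important page comes back as not allowed, a stray `Disallow` rule is a likely reason it isn't being crawled.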

How Can You Help Google Crawl Your Site? (Hint: It’s All About Architecture)

Whether you like spiders or not, you need them crawling your site (the friendly robot ones at least). So, what’s the best way to help them find all your important pages?

The answer:

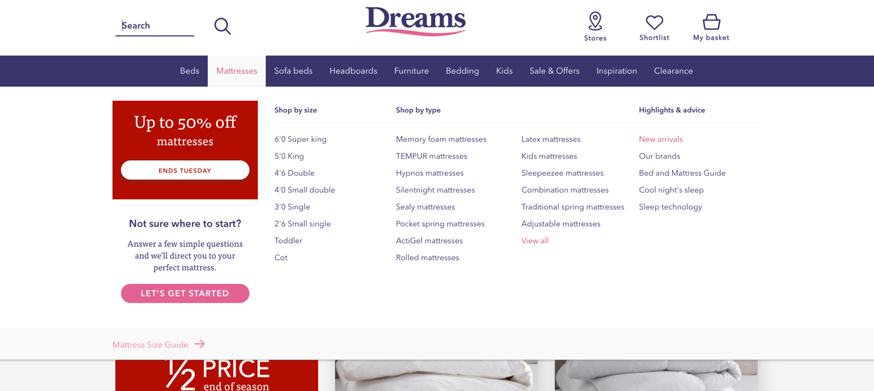

Sorting out your navigation!

Just like an architect would build a house optimal for functionality and space, you need to build a website that is optimal for crawlers.

You don’t want to have these poor spiders confused, running in all directions struggling to find your pages.

The trick is to build a tight site architecture that allows important pages to be found in 1-4 clicks. You can achieve this through category pages and frequent internal linking.
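One way to sanity-check your architecture is to measure how many clicks each page sits from the homepage. Here is a rough sketch using a breadth-first search over an internal link map. The `site` dictionary is a hypothetical structure; in practice you would build it from a crawl of your own site.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: fewest clicks needed to reach each page from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure: each page maps to the pages it links to.
site = {
    "/": ["/shoes", "/blog"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/model-x"],
    "/blog": [],
    "/orphan": [],  # nothing links here, so following links never reaches it
}

depths = click_depths(site, "/")
orphans = [page for page in site if page not in depths]

print(depths)
print("Unreachable pages:", orphans)
```

Pages with a depth above 4, or pages that show up as unreachable, are the ones that need a category page or a few extra internal links.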

And don’t make your users think! Keep your site design simple and intuitive, so your users don’t have to think too hard about how to work their way around.

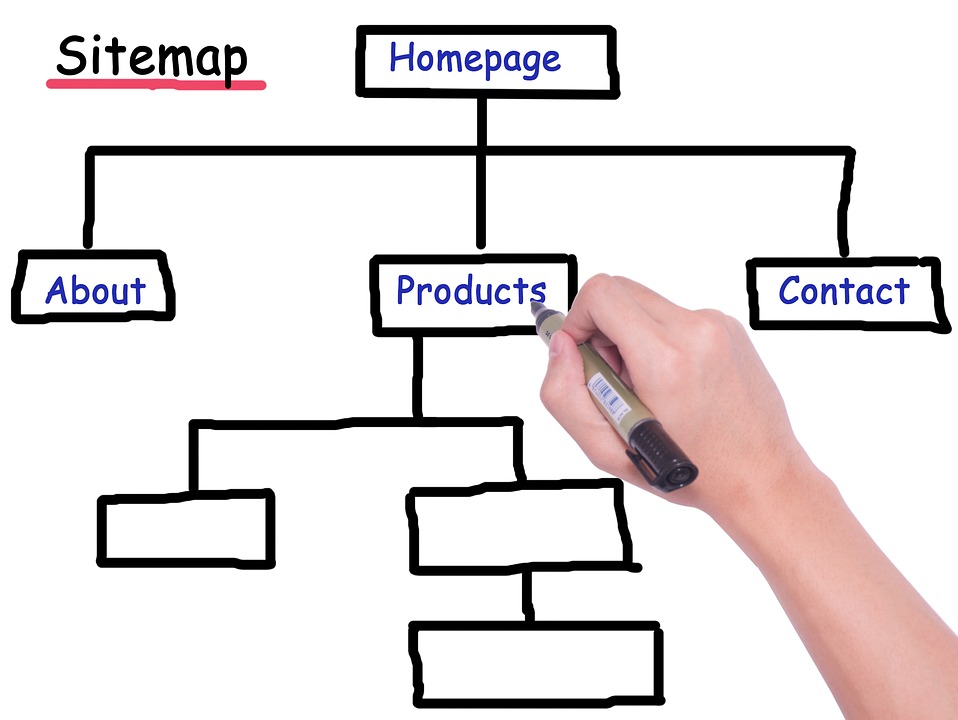

What About Sitemaps? Can’t I Just Submit One and Be Done With it?

Yes and no.

Sitemaps are a traditional way of helping Google crawlers to find your important pages. They can be submitted directly to Google and can speed up the entire process of indexation (discussed later).

However, they don’t negate the need for a main navigation.

Sitemaps are helpful if you don’t have any links pointing to your site which Google can follow. So, they tend to be more useful for newer sites that have stumbled their way onto the big scary internet.

Saying this, manually submitting your sitemap won’t guarantee your site will be indexed!

Google got smart to Blackhat webmasters submitting their sitemaps to forcefully index their sites. They’ve finally put their foot down with this. However, if your site is high quality and isn’t sending any negative signals, this can be an effective way to get your pages crawled and indexed quickly.
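For reference, a sitemap is just an XML file listing your URLs. The sketch below builds a bare-bones one with Python's standard library, using the namespace from the sitemaps.org protocol. The URLs are placeholders, and a real sitemap would normally be generated by your CMS or an SEO plugin rather than by hand.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs for illustration.
sitemap_xml = build_sitemap(["https://example.com/", "https://example.com/blog/"])
print(sitemap_xml)
```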

How Does Google Parse Your Site?

On a quick note, it is worth talking about how Google actually parses your site.

This might seem like complicated technical jargon but it’s fairly simple to understand. When Google parses a site, it essentially breaks it down and rebuilds it using the instructions given from the various code on the site.

Some code it can read fast, such as lightweight languages like HTML. Others, such as JavaScript, are read more slowly as they tend to be slightly heavier.

There is some speculation as to how exactly Google parses the code and renders sites. They like to keep it close to the chest.

However, we do know that if you have excessive JavaScript and other heavy code on your site that covers content, Google will struggle to read it. So, keep it to a minimum.
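To see why script-heavy pages are a risk, it helps to look at what is in the raw HTML before any scripts run. The sketch below pulls the text out of a hypothetical page with Python's built-in HTML parser. It does not model Google's renderer in any way; it only shows that content injected by a script is missing from the static markup.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text found in the raw HTML, skipping <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


# Hypothetical page: the review text only exists once the script has run.
raw_html = """
<h1>Running shoes</h1>
<div id="reviews"></div>
<script>
  document.getElementById("reviews").textContent = "Loved by 500 customers";
</script>
"""

parser = VisibleText()
parser.feed(raw_html)
static_text = " ".join(parser.chunks)
print(static_text)
```

Anything that only appears after a script executes depends on the page being rendered, so your most important content is safest in the HTML itself.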

How Does Google Index the Web (And Make You Visible to Searchers)?

You’ve probably seen me mention the term ‘indexing’ a fair amount now throughout this article and some of you may not know what it means.

So, let me spend a short while explaining it to you and how Google uses indexing to make sure your site appears in the search engine results pages (SERPs).

Indexing is a fancy word for ‘storing’ because that’s exactly what Google is doing when they index your site – storing it on their database.

When Google finds your site on one of their crawls (which are constantly happening by the way), if it meets their criteria as a healthy, helpful site, they will include it in their index.

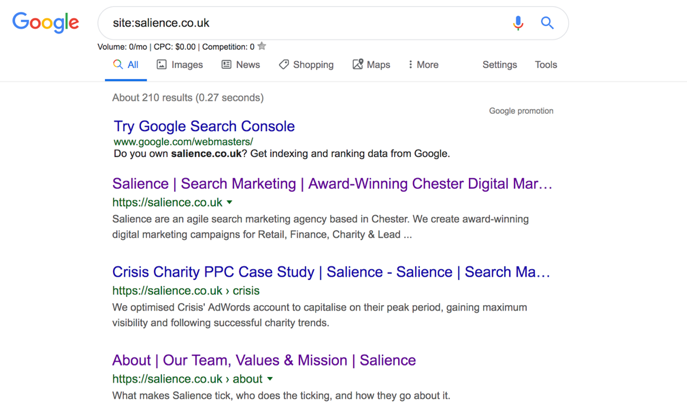

Checking whether your site is in the Google index is fairly simple. You simply have to use Google themselves with advanced search parameters.

Go to Google and type in “site:[your site]” and all the indexed pages will come up.

Although this isn’t always the most effective way to check, it is the quickest.

A more efficient way to check your indexation is through Google Search Console. Here you can find all sorts of information about your site – it’s a very useful tool indeed.

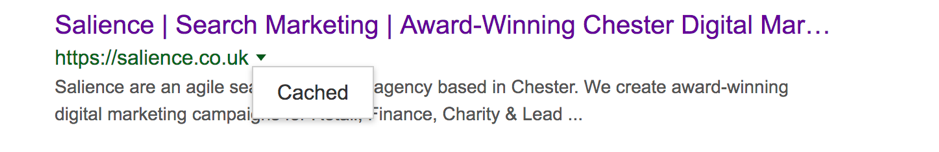

You can also see how Google has cached your site on its last crawl!

To do this, click the green arrow next to your domain and click “cached”.

From here, you can see how Google has captured your site.

Why Isn’t My Site in Google’s Index?

Google doesn’t always index everything it finds. Not all website pages make it onto their index.

Sometimes it is just by rotten luck. Other times it’s because there’s an error on the page or it doesn’t look like a high-quality site.

But what errors can stop a page from being indexed?

In general, 4xx or 5xx codes can create problems for crawlers and can result in the page being deindexed.

A 4xx error means the page itself cannot be accessed (a missing page, for example), while a 5xx error means the server isn’t responding properly. In either case, these issues need to be resolved; otherwise the page won’t be indexed.

A simple 301 (permanent) redirect will work perfectly for a 4xx error, but for a 5xx error, you may have to contact your host.
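If you have a list of status codes from a crawl of your own site, the rules of thumb above are easy to automate. Here is a small sketch using Python's built-in `http.HTTPStatus`. The `crawl_report` data is invented, and the suggested actions simply restate the advice in this section.

```python
from http import HTTPStatus


def describe_status(code):
    """Return a rough action for a crawl result, following the rules of thumb above."""
    phrase = HTTPStatus(code).phrase
    if 400 <= code < 500:
        return f"{code} {phrase}: page can't be accessed, consider a 301 redirect"
    if 500 <= code < 600:
        return f"{code} {phrase}: server problem, you may need to contact your host"
    return f"{code} {phrase}: no action needed"


# Hypothetical crawl results: URL path -> HTTP status code returned.
crawl_report = {"/old-page": 404, "/shop": 503, "/blog": 200}
actions = {url: describe_status(code) for url, code in crawl_report.items()}

for url, action in actions.items():
    print(url, "->", action)
```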

Blackhat and Indexation

If Google notices something “fishy” with your site, you may struggle to get it indexed.

Google is getting smarter every day, especially with their new AI, RankBrain, so tricking the computer is becoming more and more difficult.

As a result, if you are currently diving into the world of Blackhat with keyword stuffing, link cloaking, and PBNs, you might struggle to get a place on the SERPs.

The takeaway message?

DON’T USE BLACKHAT!

How Does Google Read Your Site so You Can Start Ranking?

“All this information about crawlers, spiders, and indexation is fascinating Lewis, but how does Google read my site so I can rank better?!”

I hear your cries, don’t worry.

But it’s important to get the foundations right; otherwise, you won’t be found by Google (yikes).

However, now you understand that section, let’s dive into how Google reads your site so it can rank you.

And yes, this is the juicy bit, so pay attention.

Content is King… Sorta

You may have heard the phrase “content is king” getting thrown around the SEO community.

The phrase isn’t exactly wrong, but it isn’t completely true either.

Content is what should make up the bulk of your website as it is what Google can quickly understand.

With software such as RankBrain, Google has become very intelligent when it comes to recognising words and written language. Even without RankBrain, Google has a number of methods it uses to read a piece of content.

Here are a few.

Co-occurrence and semantics

Google, not too long ago, released a research paper indicating they use co-occurrence in their efforts to understand content. This suggests Google looks at words that relate to the main keyword of the page. Primarily, this is to help speed up crawling, as Google can then match up different words and associate them with the overall theme of a page. For example, on a page about Richard Branson, there may be words like “business”, “Virgin”, or “Airline”. In the community, these are sometimes referred to as LSI (latent semantic indexing) keywords. They aren’t synonyms of the main keyword, but they are related to it.
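As a toy illustration of co-occurrence, the sketch below counts which words show up in the same documents as a chosen keyword. The three sample documents are invented, and this is a simplification for intuition only, not a description of Google's actual method.

```python
import re
from collections import Counter


def cooccurring_terms(documents, keyword):
    """Count, per term, how many documents contain it alongside the keyword."""
    counts = Counter()
    for doc in documents:
        words = set(re.findall(r"[a-z]+", doc.lower()))
        if keyword in words:
            counts.update(words - {keyword})
    return counts


# Invented sample documents.
docs = [
    "Branson founded the Virgin business empire",
    "Branson launched a Virgin airline",
    "The weather is mild today",
]

counts = cooccurring_terms(docs, "branson")
print(counts.most_common(3))
```

Words such as "virgin" that keep turning up next to the keyword are the related terms this section is talking about.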

TF-IDF

Don’t let yourself get scared away by the complicated nature of this abbreviation. TF-IDF stands for Term Frequency-Inverse Document Frequency. It’s a big fancy term for a way of scoring how important a word is to a page. In a nutshell, a word scores higher the more often it appears on the page, but lower the more common it is across all the other pages out there. So a word that appears everywhere (like “the”) counts for very little, while a word that is frequent on your page but rare elsewhere counts for a lot. This kind of weighting probably helped to blunt keyword stuffing during the OG days of Blackhat.
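For the curious, the textbook TF-IDF calculation fits in a few lines. The sketch below uses the standard definition (term frequency multiplied by the log of inverse document frequency) on a tiny invented corpus. How Google weights terms in practice is not public, so treat this purely as an illustration of the concept.

```python
import math


def tf_idf(term, doc, corpus):
    """Textbook TF-IDF: frequency in this doc, discounted by how many docs contain the term."""
    tf = doc.count(term) / len(doc)
    containing = sum(1 for d in corpus if term in d)
    idf = math.log(len(corpus) / containing) if containing else 0.0
    return tf * idf


# Tiny invented corpus; each document is a list of lowercase words.
corpus = [
    ["the", "virgin", "airline", "the", "business"],
    ["the", "weather", "is", "mild"],
    ["the", "business", "news"],
]
doc = corpus[0]

score_the = tf_idf("the", doc, corpus)          # appears in every document
score_airline = tf_idf("airline", doc, corpus)  # appears in only one document

print("the:", score_the)
print("airline:", score_airline)
```

Even though "the" appears twice in the first document, it scores zero because it appears in every document, while "airline" scores higher because it is specific to that page.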

Synonyms and phrase-matching

Unfortunately for Google, language is very fluid and constantly changes. Therefore, Google has to keep up by grouping similar words together, and it does this through synonyms. By doing this, Google can read a page and understand the context of the words, as opposed to seeing floating words that have no meaning.

What Else Do They Use?

Google also uses images and title tags to create an impression of your page when reading it.

It’s a pretty comprehensive piece of software if you haven’t noticed already.

However, there are ways you can help Google read and understand the content on your page. One of those ways is Schema.

Schema allows you to tag different sections of content and inform Google what they’re about. This means that Google has more of a chance at understanding exactly what the page is about.

In addition, if you mark up your content with schema properly, you can also bag yourself a rich snippet in Google’s results (if you’re on the first page).

It’s a relatively new concept that is still being experimented with.

We do have a comprehensive schema guide for ecommerce though if you’re interested!
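To give you a feel for what schema markup looks like, here is a minimal sketch that builds a JSON-LD block for an article using the schema.org vocabulary. The headline and author values are placeholders, and which properties you need depends on your content type, so check them against the schema.org documentation before using this on a live site.

```python
import json

# Placeholder values; swap in your own page details.
article_schema = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "How Google Reads Your Website",
    "author": {"@type": "Person", "name": "Example Author"},
}

# JSON-LD is embedded in the page inside a script tag of this type.
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(article_schema)
    + "</script>"
)
print(script_tag)
```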

Either way, by producing content that is easily readable from Google’s end, you can give yourself a boost in the rankings.

However, as indicated by the subheading, content by itself is not the only factor influencing whether you get a first-class ranking.

So Why Isn’t Content the Supreme King?

Content is important, yes, but it isn’t the only key factor Google reads when determining how they’re going to rank your site.

Introducing RankBrain and UX!

If Google had a God, it would definitely be user experience.

In the past few years, Google has put more effort into implementing UX metrics into their ranking algorithm than ever before.

Arguably, they are now using click-through rates and dwell time as ranking signals, so making sure users are finding your content and actually staying on it is just as important as writing the content in the first place.

This means you could have a huge piece of content, stuffed with relevant keywords and semantics, but if users are bouncing off your page, Google will slap you down the SERPs.

However:

A lot of these findings have come from correlational studies from the likes of Rand Fishkin and Larry Kim. Nothing has been confirmed by Google for definite.

Nonetheless, there are signs Google is using user experience and engagement as a signal in their algorithm, so you need to be catering content to not only offer answers but also keep users on-page.

This also means optimising your meta tags to improve CTR.
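If you want to track this yourself, CTR is simply clicks divided by impressions. Here is a quick sketch with made-up numbers; in practice you would take the click and impression figures from a tool such as Google Search Console.

```python
def click_through_rate(clicks, impressions):
    """CTR as a fraction: clicks divided by impressions (0.0 if never shown)."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


# Made-up figures: URL -> (clicks, impressions).
pages = {"/blog/seo-guide": (40, 1000), "/blog/old-post": (5, 1000)}
ctr = {url: click_through_rate(c, i) for url, (c, i) in pages.items()}

for url, rate in ctr.items():
    print(f"{url}: {rate:.1%}")
```

Pages with plenty of impressions but a low CTR are good candidates for a rewritten title or meta description.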

Can Google Read Backlinks? (The Truth about Off-Page SEO)

Back in the day, backlinks were everything.

You could literally write a 300-word article and spam it with 100s of comment and forum links, and you’d be at the top of Google by the end of the day.

Now, however, things are a little different.

Even with thousands of backlinks pointing at your site, you can still struggle to rank!

Why is this the case? What happened to the quick ranks?

Unfortunately, Google changed their algorithms, and now backlinks aren’t what they used to be. These days, you not only need volume but quality!

Don’t get me wrong…

Backlinks are still incredibly important, but when compared to content and UX, they’ve somewhat been left in the dust.

There are a million and one ways to build backlinks, but without solid content, you won’t get very far.

Aim to produce high-quality pieces of content that can be linked to and shared among the community they represent.

The days of rapid backlink production are long gone, so focus efforts on the long-term.

Wrapping It All Up

So there you have it.

A complete guide on how Google reads your site.

Here’s a quick recap:

- Google crawls pages around the web and will try its best to crawl all of your site (unless you’re not helping it!)

- Google, if it likes your site, will index you and start showing you in their SERPs (Yay!)

- If you want to start ranking, you need to write content that is easily read by Google and help it out using Schema

- Don’t forget to account for UX and user engagement, as RankBrain is forever watching

- Backlinks are important and you need them to rank. However, focus your efforts on awesome content and the backlinks will come naturally

Hopefully, you learnt a thing or two from this guide.

If you need any help with your SEO, feel free to reach out!