Introduction

The way we search for information is evolving rapidly. For years, Google has dominated online search, offering expert-backed results on everything from mattress recommendations to health advice. But with the rise of AI-powered chatbots, people are turning to AI assistants like ChatGPT, Gemini, Claude, Perplexity, and DeepSeek AI for quick, conversational answers.

This shift raises a crucial question: Which platform provides the most trustworthy, detailed, and user-friendly information about sleep and mattresses?

In this experiment, we’ll put the same mattress questions to five AI tools and to Google, then score each platform on how reliable, detailed, and user-friendly its answers are. Can AI ever replace Google? Let’s find out.

The Experiment

Platforms Tested

We compared responses from:

- Google Search (the long-standing industry leader)

- ChatGPT (OpenAI’s conversational powerhouse)

- Gemini (Google’s AI-driven search alternative)

- Claude (Anthropic’s safety-focused AI assistant)

- Perplexity AI (an AI-powered search engine)

- DeepSeek AI (China’s latest AI model)

Step 1: Selecting Mattress-Related Queries

We selected four frequently searched mattress queries that address key consumer concerns and reflect how potential buyers make decisions. The selected queries are:

- What’s the best type of mattress for back pain?

- How often should you replace a mattress?

- What are the pros and cons of memory foam mattresses?

- How do hybrid mattresses compare to spring mattresses?

We chose these four because they are high-intent informational searches: questions that signal clear purchase intent and are frequently asked by people researching mattress options. Because they mirror the kinds of searches that lead to actual purchases, they show how well AI tools and Google serve these specific user needs.

Step 2: Collecting Responses

To ensure a fair and comprehensive comparison, we systematically gathered responses from multiple sources. For each query, we:

- Consulted AI-powered chatbots

  - Directly queried ChatGPT, Gemini, Claude, Perplexity, and DeepSeek AI to see how each tool interprets and responds to mattress-related questions.

  - Noted differences in the depth, accuracy, tone, and structure of responses.

  - Assessed whether responses were fact-based, user-friendly, and aligned with authoritative sources.

- Analysed Google’s organic search results

  - Performed a manual Google search for each query and examined the top-ranking organic results.

  - Identified patterns in content structure, credibility, and source authority (e.g., were top results from mattress brands, medical professionals, review sites, or independent bloggers?).

  - Checked whether Google’s Featured Snippets or People Also Ask (PAA) boxes provided quick, reliable answers.

This structured approach to collecting and evaluating responses gave us a consistent basis for comparing how AI tools and Google handle the same questions.

Step 3: Evaluation Criteria

To assess the quality of responses consistently, we developed a 10-point scoring system based on the attributes that make an answer valuable to users. Each response was rated on the following criteria:

Scoring Criteria (Out of 10)

- Depth of Information (3 points)

  - Does the response comprehensively answer the query?

  - Does it cover multiple aspects (e.g., benefits, drawbacks, alternatives)?

  - Is the explanation factually accurate, nuanced, and aligned with expert insights?

- Clarity & Readability (2 points)

  - Is the answer well-structured, easy to follow, and free from jargon?

  - Does it provide a concise yet complete response that caters to both quick readers and in-depth researchers?

- Authority & Sources (3 points)

  - Does the response reference authoritative sources, such as medical professionals, industry experts, or research studies?

  - For AI-generated responses, does the tool cite its data sources, or does it make unverified claims?

  - For Google results, do the top-ranking pages come from trusted domains (e.g., government, medical, or well-established industry sites)?

- Usefulness & Extra Features (2 points)

  - Does the response enhance the user experience with added-value elements such as:

    - Summaries or key takeaways for quick skimming.

    - Bullet points, tables, or comparisons to aid understanding.

    - Related questions or suggested next steps for further exploration.

  - Does the answer address user intent (e.g., product recommendations for buyers, scientific explanations for researchers)?

Together, these criteria let us judge which platform, AI chatbots or Google, delivers the most reliable, actionable, and user-friendly information for mattress-related queries.

Results & Analysis

And the results are in! After analysing all the responses, here’s how each platform performed:

Platform Ratings (Out of 10)

| Platform | Depth (3) | Clarity (2) | Authority (3) | Usefulness (2) | Total (10) |
| --- | --- | --- | --- | --- | --- |
| Google | 3 | 2 | 3 | 2 | 10/10 |
| Perplexity | 3 | 2 | 2.5 | 1.5 | 9/10 |
| Gemini | 2.5 | 2 | 2 | 2 | 8.5/10 |
| ChatGPT | 2.5 | 2 | 1.5 | 2 | 8/10 |
| Claude | 2.5 | 2 | 1.5 | 1.5 | 7.5/10 |
| DeepSeek AI | 2 | 1.5 | 1 | 1 | 5.5/10 |
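
For readers who want to see the arithmetic behind the table, here is a minimal Python sketch. It assumes each platform’s total is simply the unweighted sum of its four criterion scores, each capped at the maximum points listed in our scoring criteria (which matches every row above). The names RUBRIC_MAX, SCORES, total_score and ranking are hypothetical and purely illustrative; they are not part of any tool we used.

```python
# Hypothetical sketch: re-check the published totals against the rubric caps.
# Assumption: a total is the plain sum of the four criterion scores.

# Maximum points per criterion, taken from the scoring criteria.
RUBRIC_MAX = {"depth": 3.0, "clarity": 2.0, "authority": 3.0, "usefulness": 2.0}

# Criterion scores copied from the ratings table.
SCORES = {
    "Google":      {"depth": 3.0, "clarity": 2.0, "authority": 3.0, "usefulness": 2.0},
    "Perplexity":  {"depth": 3.0, "clarity": 2.0, "authority": 2.5, "usefulness": 1.5},
    "Gemini":      {"depth": 2.5, "clarity": 2.0, "authority": 2.0, "usefulness": 2.0},
    "ChatGPT":     {"depth": 2.5, "clarity": 2.0, "authority": 1.5, "usefulness": 2.0},
    "Claude":      {"depth": 2.5, "clarity": 2.0, "authority": 1.5, "usefulness": 1.5},
    "DeepSeek AI": {"depth": 2.0, "clarity": 1.5, "authority": 1.0, "usefulness": 1.0},
}


def total_score(ratings: dict[str, float]) -> float:
    """Sum the four criterion scores after checking each stays within its cap."""
    for criterion, cap in RUBRIC_MAX.items():
        value = ratings[criterion]
        if not 0 <= value <= cap:
            raise ValueError(f"{criterion} score {value} is outside 0..{cap}")
    return sum(ratings[c] for c in RUBRIC_MAX)


def ranking(scores: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (platform, total) pairs sorted from highest to lowest total."""
    totals = [(name, total_score(r)) for name, r in scores.items()]
    return sorted(totals, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # ":g" prints 10.0 as "10" and 8.5 as "8.5".
    for name, total in ranking(SCORES):
        print(f"{name}: {total:g}/10")
```

Running it reproduces the order in the table, from Google at 10/10 down to DeepSeek AI at 5.5/10. Keeping the caps in one place means a score that exceeds its criterion’s maximum is flagged instead of silently inflating a total.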

Key Insights:

- Google (10/10): The best overall, combining depth, sources, and extra search features.

- Perplexity (9/10): Detailed, well-sourced answers, though its sources are not always fully authoritative.

- Gemini (8.5/10): Well-structured and visually useful, but authority varies.

- ChatGPT (8/10): Easy to read and structured but lacks diverse sources.

- Claude (7.5/10): Balanced but missing strong citations.

- DeepSeek AI (5.5/10): The weakest, needing more detail and sourcing.

A Detailed Breakdown of the AI and Google Responses

What’s the best type of mattress for back pain?

- Google: A standard SERP with no AI-generated overview. However, the People Also Ask section provided helpful related questions for further exploration.

- ChatGPT: Delivered an easy-to-understand breakdown of different mattress types, highlighting key considerations. External sources were mentioned.

- Claude: Well-structured response with clear, easy-to-read points, lacking external sources. It also posed a follow-up question.

- Gemini: Stated it couldn’t offer specific advice but outlined key factors to consider when choosing a mattress for back pain.

- Perplexity: Provided a detailed bullet-pointed response, cited trusted sources, and included related searches.

- DeepSeek AI: Offered a basic explanation with pros and cons but lacked depth.

How often should you replace a mattress?

- Google: AI-generated overview with sourced information from health and sleep organisations.

- ChatGPT: Provided practical guidelines but lacked authoritative citations.

- Claude: Gave a very brief response, then asked whether we wanted more detail.

- Gemini: Appeared similar to Google’s AI-generated overview.

- Perplexity: Concise but supported by trusted sources. Included a conclusion that effectively summarised the key points.

- DeepSeek AI: Offered general industry standards and different replacement timeframes based on mattress types.

What are the pros and cons of memory foam mattresses?

- Google: Listed pros and cons with some authoritative sources.

- ChatGPT: Relied mainly on a single source. The response was easy to read but lacked variety in citations.

- Claude: Effectively listed pros and cons but did not include references.

- Gemini: Provided a pros and cons list but lacked authoritative sources.

- Perplexity: Presented a bullet-pointed list with five pros and cons, making it easy to read.

- DeepSeek AI: A basic comparison that was clear but lacked depth and detail.

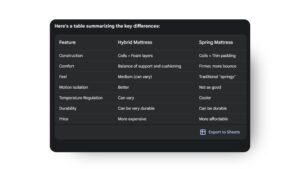

How do hybrid mattresses compare to spring mattresses?

- Google: Provided a well-cited and easy-to-read response.

- ChatGPT: Similar to Perplexity in structure and content.

- Claude: Offered a balanced, structured comparison, including price considerations, but lacked references.

- Gemini: Created a comparison table to visualise key features, included trusted sources, and provided a strong general overview.

- Perplexity: Delivered a brief overview, making it easy to read, but ultimately left the decision to personal preference. Sources lacked authority.

- DeepSeek AI: No sources cited. The response felt repetitive and lacked structure, leaving key questions unanswered.

Analysing the Final Prompt: Why Did Some AI Models Stand Out More Than Others?

Let’s take a closer look at how each platform handled the final query, comparing hybrid and spring mattresses.

Google: Adopts a community-driven approach, incorporating diverse perspectives through videos, forums, and discussions. This adds variety and real-world context to responses but may feel overwhelming for users seeking a concise answer.

Gemini: The use of a table format is a standout feature, presenting information in a structured, easy-to-digest manner that enhances readability and comparison.

Perplexity: Groups answers by key points and supports them with credible sources, adding authority to its responses. However, the formal and structured tone may feel less engaging for some users.

ChatGPT: Breaks down points individually, providing clear explanations in a familiar format. However, it lacks the visual structure that some other models offer.

Claude: Similar in approach to other models but lacks citations, which could reduce the perceived reliability of the information.

DeepSeek AI: The lack of trusted sources and a repetitive structure make its responses less engaging. Greater conciseness and more varied formatting would improve clarity and usability.

Conclusion: AI vs Google – Which Delivers the Best Answers?

Our analysis evaluated five AI models alongside Google, assessing their depth of information, clarity, authority, and overall usefulness, and scored each platform out of 10.

Top Performers:

Google (10/10) – The gold standard for search. Google delivers the most comprehensive, authoritative, and user-friendly results, backed by clear citations, structured SERPs, and additional search features such as “People Also Ask”.

Perplexity (9/10) – A strong AI contender, particularly in sourcing and readability. Perplexity structures information well, with clear citations, but still lags slightly behind Google in overall authority.

Mid-Tier Models:

Gemini (8.5/10) – Excels in formatting, often presenting key takeaways in tables or structured points. However, the authority of its sources is inconsistent.

ChatGPT (8/10) – Delivers well-structured, easy-to-understand responses but often lacks authoritative citations, making it less reliable for users seeking in-depth, well-sourced insights.

Claude (7.5/10) – Provides balanced, conversational responses but lacks citations and follow-up depth, which impacts its credibility.

Lowest Performer:

DeepSeek AI (5.5/10): While it covers basic information, its responses are often shallow and repetitive and rarely cite authoritative sources. Users may need to conduct additional research to verify and expand on its answers.

Overall Conclusion:

While AI-powered assistants are evolving rapidly, Google remains the most reliable and authoritative source for detailed, well-cited information. Its ability to pull from trusted domains, provide structured results, and offer interactive search features makes it unparalleled in delivering comprehensive insights.

That said, Perplexity and Gemini show promising advancements in AI-driven search, particularly in structuring responses and citing sources. ChatGPT and Claude offer clarity and readability but lack authoritative backing, making them better suited for quick, conversational answers rather than research-driven queries. DeepSeek AI, however, has significant room for improvement, particularly in sourcing and content depth.

As AI search capabilities continue to evolve, hybrid models integrating Google’s authority with AI’s conversational efficiency may redefine the way users seek and consume information in the future.

P.S. Here are 5 things you may like:

- Get a free audit of your marketing strategy. >>Click here to get your free audit<<

- We’re credited with the best large search campaign of 2023 >>Click here to read the case study of our work with dreams <<

- What does the future of search look like? >>The Future of SEO and Search<<

- AI Search Thought piece >>How Google’s Search Generative Experience (SGE) Impacts Featured Snippets <<